Publications

\* equal contribution

Peer-Reviewed Conference and Journal Papers

2025

-

Grab-n-Go: On-the-Go Microgesture Recognition with Objects in Hand. Chi-Jung Lee, Jiaxin Li, Tianhong Catherine Yu, Ruidong Zhang, Vipin Gunda, François Guimbretière, and Cheng Zhang. In IMWUT, 2025.

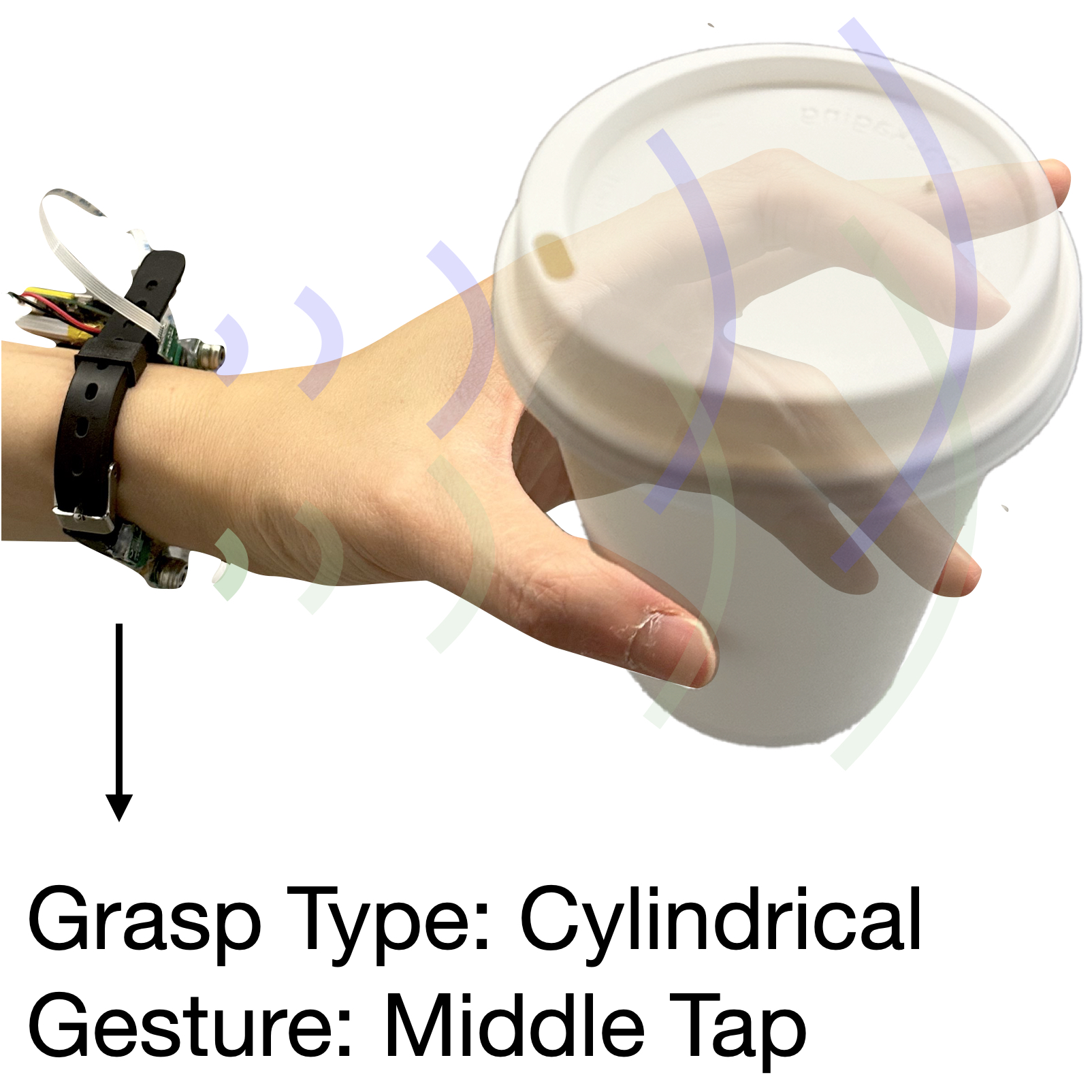

As computing devices become increasingly integrated into daily life, there is a growing need for intuitive, always-available interaction methods, even when users’ hands are occupied. In this paper, we introduce Grab-n-Go, the first wearable device that leverages active acoustic sensing to recognize subtle hand microgestures while holding various objects. Unlike prior systems that focus solely on free-hand gestures or basic hand-object activity recognition, Grab-n-Go simultaneously captures information about hand microgestures, grasping poses, and object geometries using a single wristband, enabling the recognition of fine-grained hand movements occurring within activities involving occupied hands. A deep learning framework processes these complex signals to identify 30 distinct microgestures, with 6 microgestures for each of the 5 grasping poses. In a user study with 10 participants and 25 everyday objects, Grab-n-Go achieved an average recognition accuracy of 92.0%. A follow-up study further validated Grab-n-Go’s robustness against 10 more challenging, deformable objects. These results underscore the potential of Grab-n-Go to provide seamless, unobtrusive interactions without requiring modifications to existing objects. The complete dataset, comprising data from 18 participants performing 30 microgestures with 35 distinct objects, is publicly available at https://github.com/cjlisalee/Grab-n-Go_Data with the DOI: https://doi.org/10.7298/7kbd-vv75.

-

SpellRing: Recognizing Continuous Fingerspelling in American Sign Language using a Ring. Hyunchul Lim, Nam Anh Dang, Dylan Lee, Tianhong Catherine Yu, Jane Lu, Franklin Mingzhe Li, Yiqi Jin, Yan Ma, Xiaojun Bi, Francois Guimbretiere, and Cheng Zhang. In CHI, 2025.

Fingerspelling is a critical part of American Sign Language (ASL) recognition and has become an accessible optional text entry method for Deaf and Hard of Hearing (DHH) individuals. In this paper, we introduce SpellRing, a single smart ring worn on the thumb that recognizes words continuously fingerspelled in ASL. SpellRing uses active acoustic sensing (via a microphone and speaker) and an inertial measurement unit (IMU) to track handshape and movement, which are processed through a deep learning algorithm using Connectionist Temporal Classification (CTC) loss. We evaluated the system with 20 ASL signers (13 fluent and 7 learners), using the MacKenzie-Soukoreff Phrase Set of 1,164 words and 100 phrases. Offline evaluation yielded top-1 and top-5 word recognition accuracies of 82.45% (±9.67%) and 92.42% (±5.70%), respectively. In real time, the system achieved a word error rate (WER) of 0.099 (±0.039) on the phrases. Based on these results, we discuss key lessons and design implications for future minimally obtrusive ASL recognition wearables.

-

SeamFit: Towards Practical Smart Clothing for Automatic Exercise Logging. Tianhong Catherine Yu, Manru Mary Zhang\*, Luis Miguel Malenab\*, Chi-Jung Lee, Jacky Hao Jiang, Ruidong Zhang, Francois Guimbretiere, and Cheng Zhang. In IMWUT, 2025.

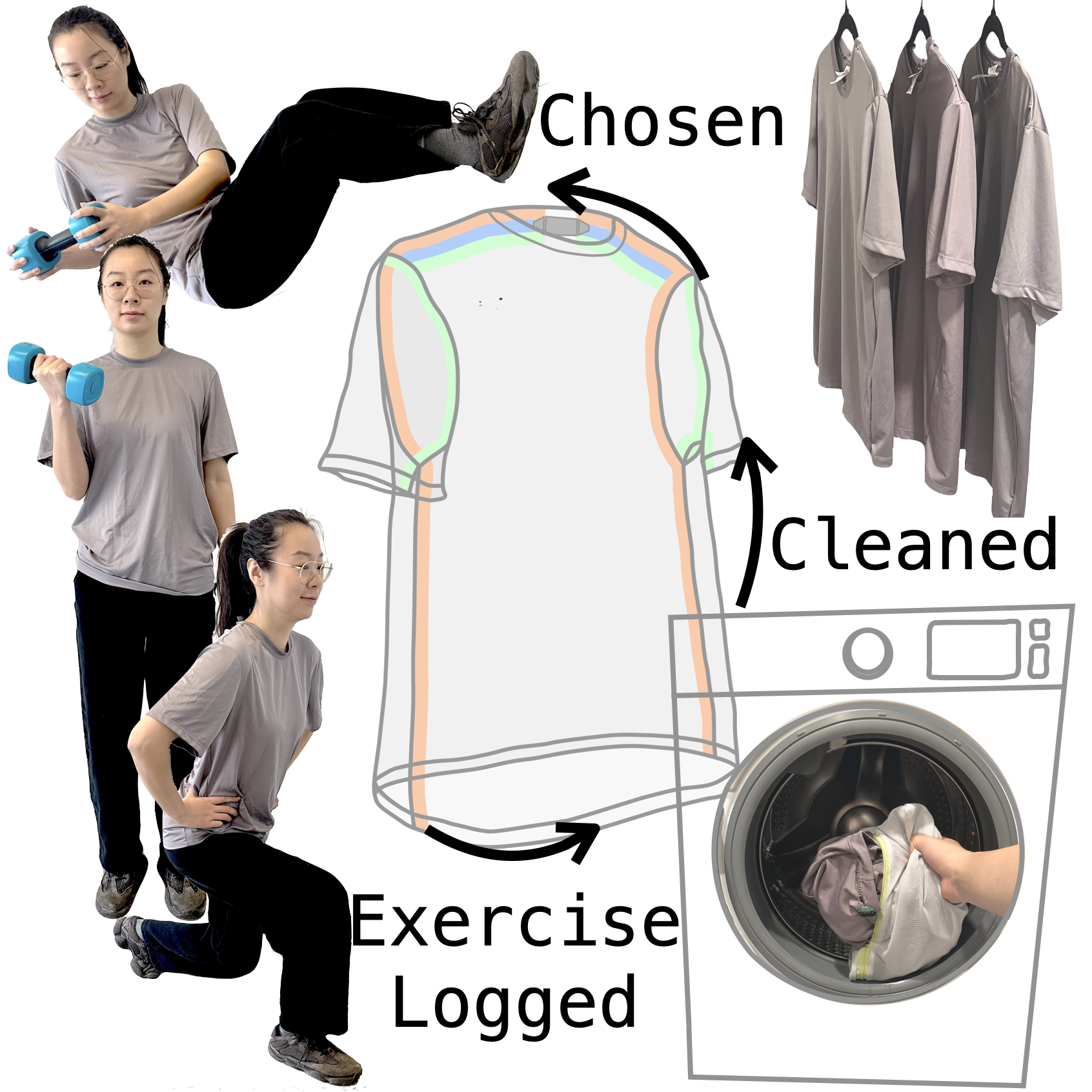

Smart clothing has exhibited impressive body pose/movement tracking capabilities while preserving the soft, comfortable, and familiar nature of clothing. For practical everyday use, smart clothing should (1) be available in a range of sizes to accommodate different fit preferences, and (2) be washable to allow repeated use. In SeamFit, we demonstrate washable T-shirts, embedded with capacitive seam electrodes, available in three different sizes, for exercise logging. Our T-shirt design and our customized signal processing and machine learning pipeline allow the SeamFit system to generalize across users, fits, and wash cycles. Prior wearable exercise logging solutions, which often attach a miniaturized sensor to a body location, struggle to track exercises that mainly involve other body parts. The SeamFit T-shirt naturally covers a large area of the body and still tracks exercises that mainly involve uncovered joints (e.g., elbows and the lower body). In a user study with 15 participants performing 14 exercises, SeamFit detects exercises with an accuracy of 89%, classifies exercises with an accuracy of 93.4%, and counts exercises with an error of 0.9 counts, on average. SeamFit is a step towards practical smart clothing for everyday use.

2024

-

Ring-a-Pose: A Ring for Continuous Hand Pose Tracking. Tianhong Catherine Yu, Guilin Hu, Ruidong Zhang, Hyunchul Lim, Saif Mahmud, Chi-Jung Lee, Ke Li, Devansh Agarwal, Shuyang Nie, Jinseok Oh, Francois Guimbretiere, and Cheng Zhang. In IMWUT, 2024.

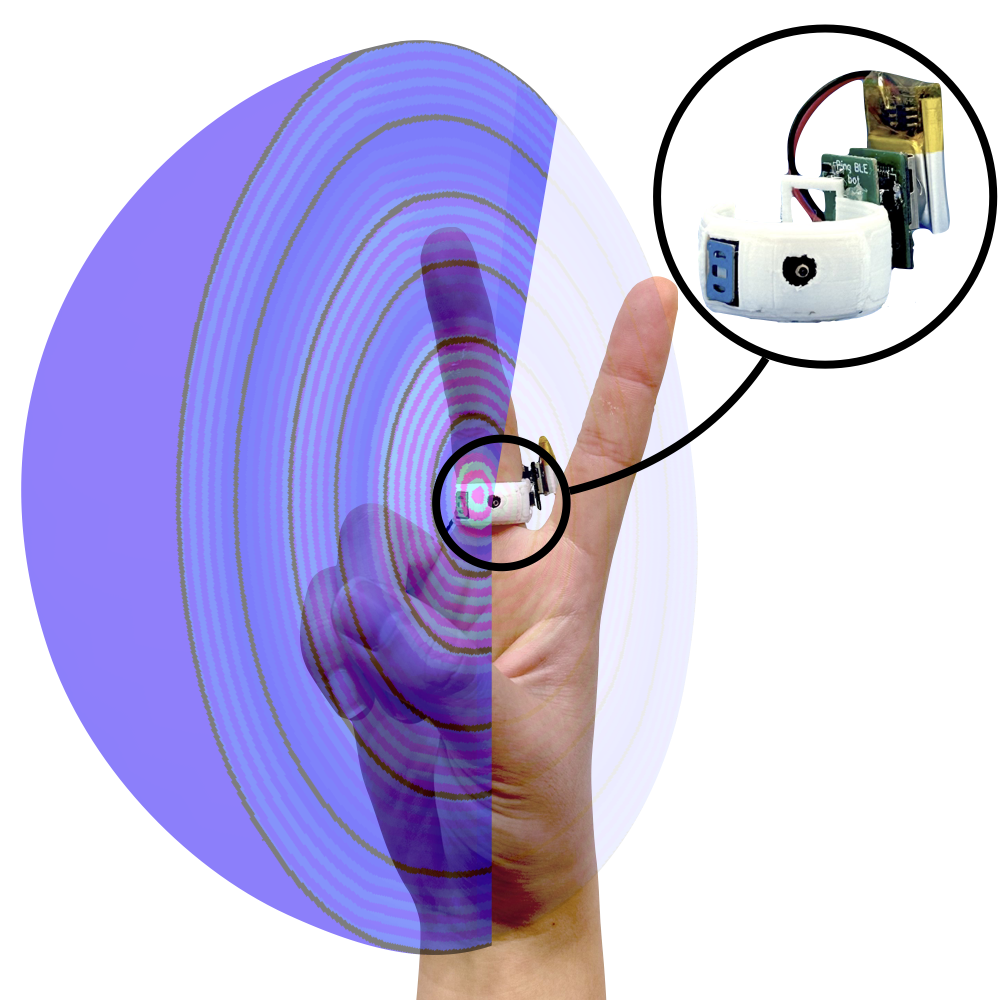

We present Ring-a-Pose, a single untethered ring that tracks continuous 3D hand poses. Located in the center of the hand, the ring emits an inaudible acoustic signal that each hand pose reflects differently. Ring-a-Pose imposes minimal obtrusions on the hand, unlike multi-ring or glove systems. It is not affected by the choice of clothing that may cover wrist-worn systems. In a series of three user studies with a total of 36 participants, we evaluate Ring-a-Pose’s performance on pose tracking and micro-finger gesture recognition. Without collecting any training data from a user, Ring-a-Pose tracks continuous hand poses with a joint error of 14.1 mm. The joint error decreases to 10.3 mm for fine-tuned user-dependent models. Ring-a-Pose recognizes 7-class micro-gestures with 90.60% and 99.27% accuracy for user-independent and user-dependent models, respectively. Furthermore, the ring exhibits promising performance when worn on any finger. Ring-a-Pose enables the future of smart rings to track and recognize hand poses using relatively low-power acoustic sensing.

-

SeamPose: Repurposing Seams as Capacitive Sensors in a Shirt for Upper-Body Pose Tracking. Tianhong Catherine Yu, Manru Mary Zhang\*, Peter He\*, Chi-Jung Lee, Cassidy Cheesman, Saif Mahmud, Ruidong Zhang, Francois Guimbretiere, and Cheng Zhang. In UIST, 2024.

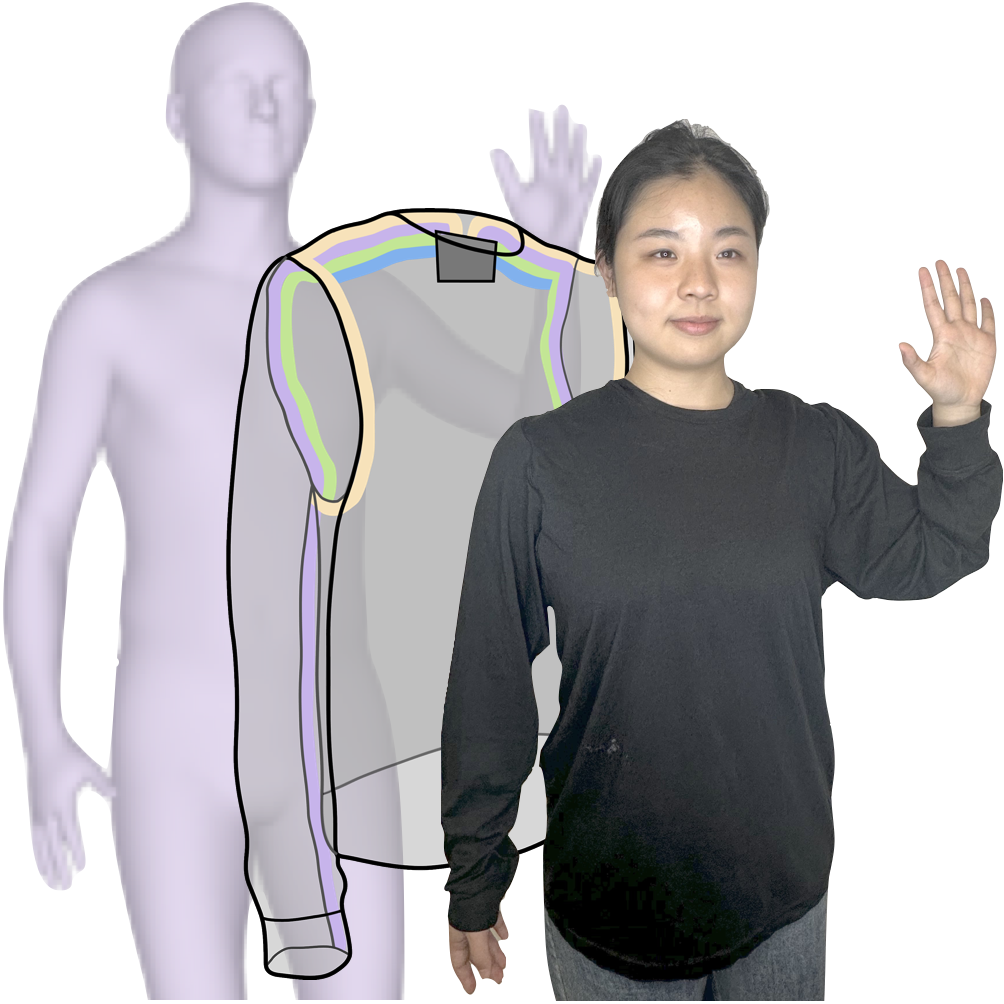

Seams are areas of overlapping fabric formed by stitching two or more pieces of fabric together in the cut-and-sew apparel manufacturing process. In SeamPose, we repurposed seams as capacitive sensors in a shirt for continuous upper-body pose estimation. Compared to previous all-textile motion-capturing garments that place the electrodes on the clothing surface, our solution leverages existing seams inside of a shirt by machine-sewing insulated conductive threads over the seams. The invisibility and placement of the seams allow the sensing shirt to look and wear like a conventional shirt while providing exciting pose-tracking capabilities. To validate this approach, we implemented a proof-of-concept untethered shirt with 8 capacitive sensing seams. In a 12-participant user study, our customized deep-learning pipeline accurately estimates the relative (to the pelvis) upper-body 3D joint positions with a mean per joint position error (MPJPE) of 6.0 cm. SeamPose represents a step towards unobtrusive integration of smart clothing for everyday pose estimation.

-

EchoWrist: Continuous Hand Pose Tracking and Hand-Object Interaction Recognition Using Low-Power Active Acoustic Sensing On a Wristband. Chi-Jung Lee\*, Ruidong Zhang\*, Devansh Agarwal, Tianhong Catherine Yu, Vipin Gunda, Oliver Lopez, James Kim, Sicheng Yin, Boao Dong, Ke Li, Mose Sakashita, Francois Guimbretiere, and Cheng Zhang. In CHI, 2024.

Our hands serve as a fundamental means of interaction with the world around us. Therefore, understanding hand poses and interaction contexts is critical for human-computer interaction (HCI). We present EchoWrist, a low-power wristband that continuously estimates 3D hand poses and recognizes hand-object interactions using active acoustic sensing. EchoWrist is equipped with two speakers emitting inaudible sound waves toward the hand. These sound waves interact with the hand and its surroundings through reflections and diffractions, carrying rich information about the hand’s shape and the objects it interacts with. The information captured by the two microphones goes through a deep learning inference system that recovers hand poses and identifies various everyday hand activities. Results from two 12-participant user studies show that EchoWrist is effective and efficient at tracking 3D hand poses and recognizing hand-object interactions. Operating at 57.9 mW, EchoWrist can continuously reconstruct 20 3D hand joints with an MJEDE of 4.81 mm and recognize 12 naturalistic hand-object interactions with 97.6% accuracy.

2023

-

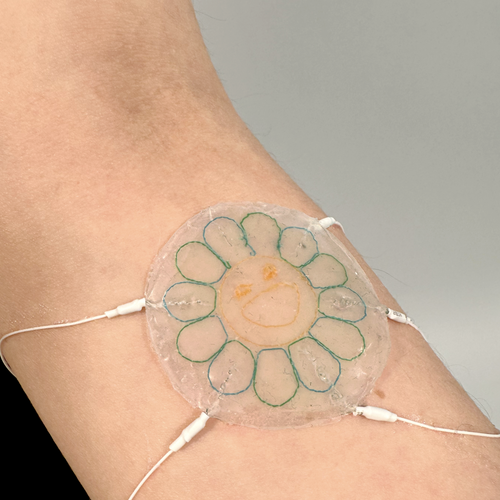

Skinergy: Machine-Embroidered Silicone-Textile Composites as On-Skin Self-Powered Input Sensors. Tianhong Catherine Yu, Nancy Wang\*, Sarah Ellenbogen\*, and Cindy Hsin-Liu Kao. In UIST, 2023.

We propose Skinergy for self-powered on-skin input sensing, a step towards prolonged on-skin device usage. In contrast to prior on-skin gesture interaction sensors, Skinergy’s sensor operation does not require external power. Enabled by the triboelectric nanogenerator (TENG) phenomenon, the machine-embroidered silicone-textile composite sensor converts mechanical energy from the input interaction into electrical energy. Our proof-of-concept untethered sensing system measures the voltages of the generated electrical signals, which are then processed for a diverse set of sensing tasks: discrete touch detection, multi-contact detection, contact localization, and gesture recognition. Skinergy is fabricated with off-the-shelf materials. The aesthetic and functional designs can be easily customized and digitally fabricated. We characterize Skinergy and conduct a 10-participant user study to (1) evaluate its gesture recognition performance and (2) probe user perceptions and potential applications. Skinergy achieves 92.8% accuracy for an 11-class gesture recognition task. Our findings reveal that human factors (e.g., individual differences in skin properties, and aesthetic preferences) are key considerations in designing self-powered on-skin sensors for human inputs.

-

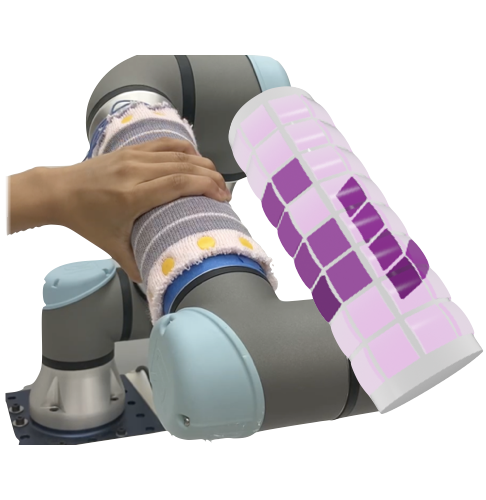

RobotSweater: Scalable, Generalizable, and Customizable Machine-Knitted Tactile Skins for Robots. Zilin Si\*, Tianhong Catherine Yu\*, Katrene Morozov, James McCann, and Wenzhen Yuan. In ICRA, 2023.

Tactile sensing is essential for robots to perceive and react to the environment. However, it remains a challenge to make large-scale and flexible tactile skins on robots. Industrial machine knitting provides solutions to manufacture customizable fabrics. Along with functional yarns, it can produce highly customizable circuits that can be made into tactile skins for robots. In this work, we present RobotSweater, a machine-knitted pressure-sensitive tactile skin that can be easily applied on robots. We design and fabricate a parameterized multi-layer tactile skin using off-the-shelf yarns, and characterize our sensor on both a flat testbed and a curved surface to show its robust contact detection, multi-contact localization, and pressure sensing capabilities. The sensor is fabricated using a well-established textile manufacturing process with a programmable industrial knitting machine, which makes it highly customizable and low-cost. The textile nature of the sensor also lets it fit the curved surfaces of different robots easily and gives it a friendly appearance. Using our tactile skins, we conduct closed-loop control with tactile feedback for two applications: (1) human lead-through control of a robot arm, and (2) human-robot interaction with a mobile robot.

-

uKnit: A Position-Aware Reconfigurable Machine-Knitted Wearable for Gestural Interaction and Passive Sensing using Electrical Impedance Tomography. Tianhong Catherine Yu, Riku Arakawa, James McCann, and Mayank Goel. In CHI, 2023.

A scarf is inherently reconfigurable: wearers often use it as a neck wrap, a shawl, a headband, a wristband, and more. We developed uKnit, a scarf-like soft sensor with scarf-like reconfigurability, built with machine knitting and electrical impedance tomography sensing. Soft wearable devices are comfortable and thus attractive for many human-computer interaction scenarios. While prior work has demonstrated various soft wearable capabilities, each capability is device- and location-specific, so no single device can meet users’ various needs. In contrast, uKnit explores the possibility of one-soft-wearable-for-all. We describe the fabrication and sensing principles behind uKnit, demonstrate several example applications, and evaluate it with 10-participant user studies and a washability test. uKnit achieves 88.0%/78.2% accuracy for 5-class worn-location detection and 80.4%/75.4% accuracy for 7-class gesture recognition with a per-user/universal model. Moreover, it identifies respiratory rate with an error rate of 1.25 bpm and detects binary sitting postures with an average accuracy of 86.2%.

2020

-

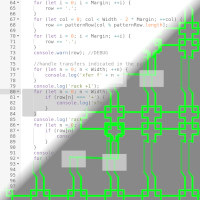

Coupling Programs and Visualization for Machine Knitting. Tianhong Catherine Yu and James McCann. In SCF, 2020.

To effectively program knitting machines, like any fabrication machine, users must be able to place the code they write in correspondence with the output the machine produces. This mapping is used in the code-to-output direction to understand what their code will produce, and in the output-to-code direction to debug errors in the finished product. In this paper, we describe and demonstrate an interface that provides two-way coupling between high- or low-level knitting code and a topological visualization of the knitted output. Our system allows the user to locate the knitting machine operations generated by any selected code, as well as the code that generates any selected knitting machine operation. This link between the code and visualization has the potential to reduce the time spent in design, implementation, and debugging phases, and save material costs by catching errors before actually knitting the object. We show examples of patterns designed using our tool and describe common errors that the tool catches when used in an academic lab setting and an undergraduate course.

LBWs, Workshops

-

Million Eyes on the ‘Robot Umps’: The Case for Studying Sports in HRI Through Baseball. Waki Kamino, Andrea W. Wen-Yi, Dhruv Agarwal, Sil Hamilton, Eun Jeong Kang, Jieun Kim, Keigo Kusumegi, Pegah Moradi, Daniel Mwesigwa, Yan Tao, I-Ting Tsai, Ethan Yang, Shengqi Zhu, Shu-Jung Han, Chi-Jung Lee, Michael Joseph Sack, Tianhong Catherine Yu, Weslie Khoo, Andy Elliot Ricci, Yoyo Tsung-Yu Hou, Boyoung Kim, Selma Šabanović, David J. Crandall, Karen Levy, and Malte F. Jung. In HRI LBW, 2025.

In this position paper, we argue that baseball, and sports more broadly, provide a unique and under-explored opportunity for researchers to study human-robot interaction (HRI) in real-world settings. Using the rise of robot umpires in baseball as a primary example, we examine emerging themes such as power dynamics among players and umpires, labor implications, and technical challenges. We emphasize the affordances and benefits of studying sports within HRI, including the integration of interdisciplinary perspectives, the large-scale deployment of robots, and the examination of their role in deeply rooted cultural practices.

-

Democratizing Intelligent Soft Wearables. Cedric Honnet, Tianhong Catherine Yu, Irmandy Wicaksono, Tingyu Cheng, Andreea Danielescu, Cheng Zhang, Stefanie Mueller, Joe Paradiso, and Yiyue Luo. In UIST Workshop, 2024.

Wearables have long been integral to human culture and daily life. Recent advances in intelligent soft wearables have dramatically transformed how we interact with the world, enhancing our health, productivity, and overall well-being. These innovations, combining advanced sensor design, fabrication, and computational power, offer unprecedented opportunities for monitoring, assistance, and augmentation. However, the benefits of these advancements are not yet universally accessible. Economic and technical barriers often limit the reach of these technologies to domain-specific experts. There is a growing need for democratizing intelligent wearables that are scalable, seamlessly integrated, customized, and adaptive. By bringing researchers from relevant disciplines together, this workshop aims to identify the challenges and investigate opportunities for democratizing intelligent soft wearables within the HCI community via interactive demos, invited keynotes, and focused panel discussions.